Junyi Zhu

Senior Applied Scientist at Microsoft UK.

I focus on building AI that actually understands people, whether that’s making their workday easier or their personal life more creative.

Currently, I drive post-training efforts for Microsoft 365 Copilot, building models with deep contextual awareness of workplace users, their roles, and their organizations.

Previously, I drove innovation at Samsung Research, prototyping advanced AI features for the next generation of smartphones, spanning everything from core productivity to digital entertainment.

I completed my PhD studies at KU Leuven in Belgium, under the guidance of Prof. Matthew Blaschko. During my PhD, I explored and contributed to many domains in AI, including image generation models, large language models, distributed learning, and privacy-preserving machine learning.

Before my PhD, I earned my Master’s degree from the Karlsruhe Institute of Technology in Germany, specializing in autonomous driving. During my Master’s program, I worked on several research projects using AI to solve control and perception tasks in autonomous driving at the Institute of Measurement and Control Systems and the Research Center for Information Technology in Karlsruhe.

If you are interested in research collaboration, feel free to reach out! 😊

news

| Sep 18, 2025 | Our paper Latent Zoning Network: A Unified Principle for Generative Modeling, Representation Learning, and Classification has been accepted at NeurIPS 2025. |

|---|---|

| Sep 14, 2025 | I am honored to serve as an Area Chair for CVPR 2026. I look forward to contributing to the community and supporting the review process in this role. |

| Sep 05, 2025 | Our paper Guided Model Merging for Hybrid Data Learning: Leveraging Centralized Data to Refine Decentralized Models has been accepted at WACV 2026 (Round 1, accceptance rate 6.3%). |

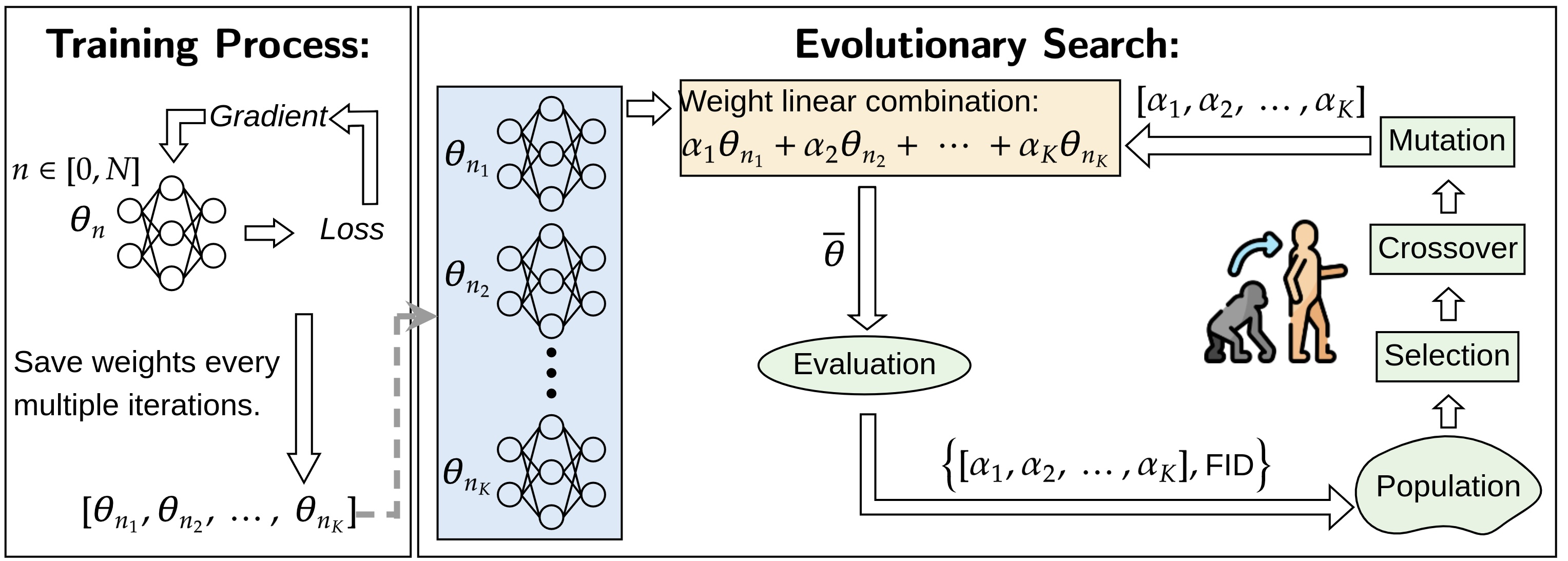

| Mar 14, 2025 | Our paper Linear Combination of Saved Checkpoints Makes Consistency and Diffusion Models Better has been accepted at ICLR 2025. |

selected publications

- ICLR

Linear Combination of Saved Checkpoints Makes Consistency and Diffusion Models Better2025* = Co-first authors

Linear Combination of Saved Checkpoints Makes Consistency and Diffusion Models Better2025* = Co-first authors - EMNLP Findings

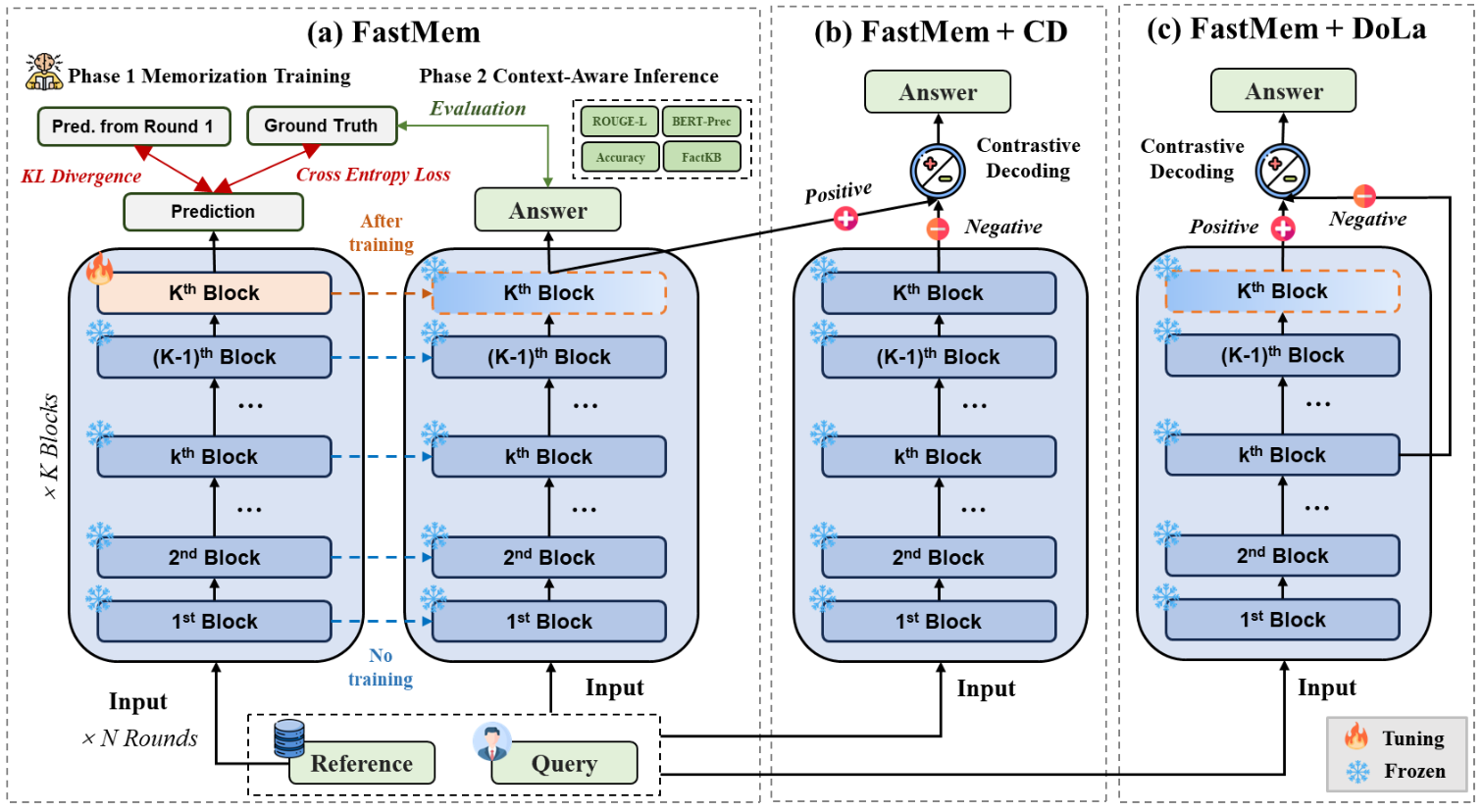

FastMem: Fast Memorization of Prompt Improves Context Awareness of Large Language Models2024* = Co-first authors

FastMem: Fast Memorization of Prompt Improves Context Awareness of Large Language Models2024* = Co-first authors - CVPR

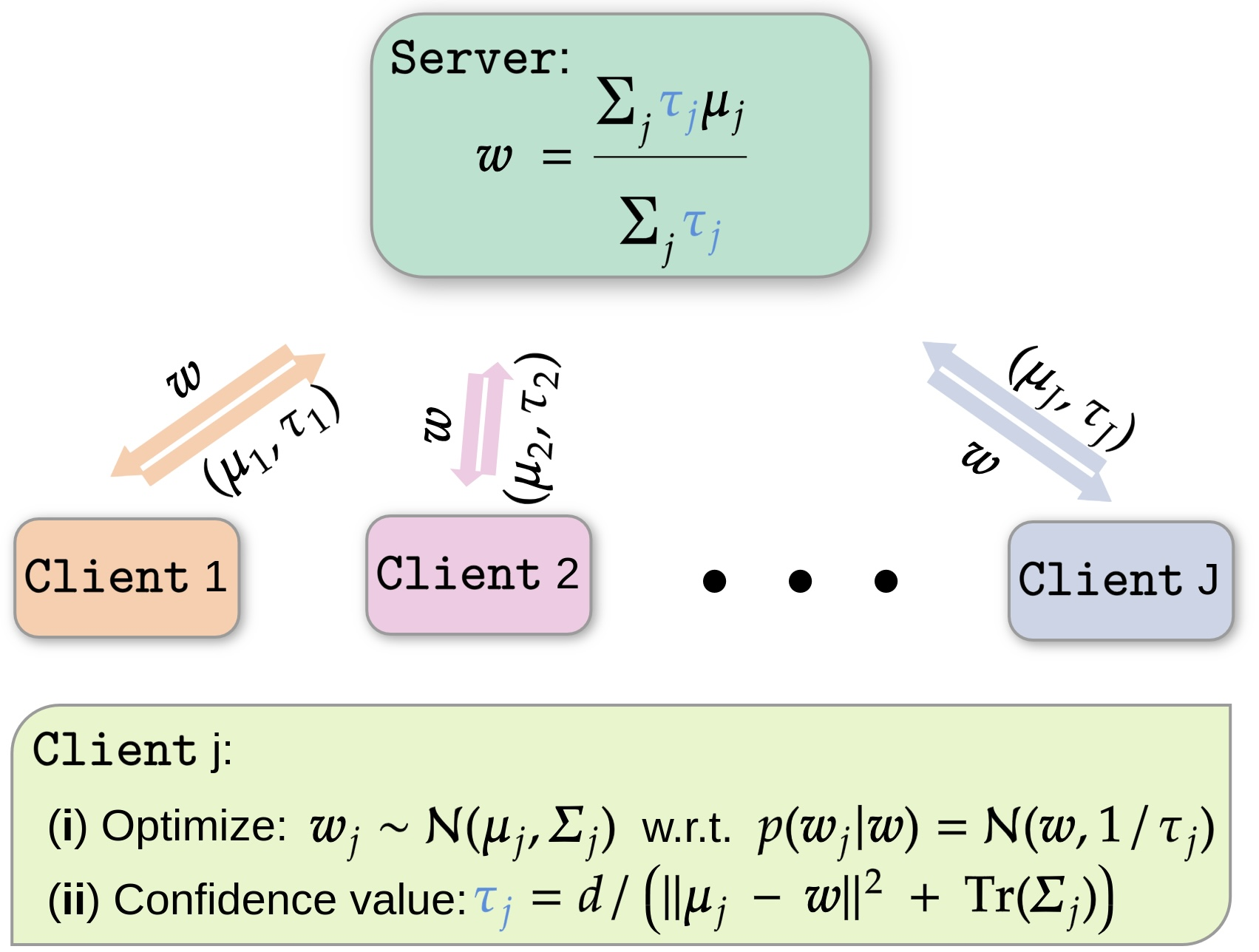

Confidence-aware Personalized Federated Learning via Variational Expectation Maximization2023* = Co-first authors

Confidence-aware Personalized Federated Learning via Variational Expectation Maximization2023* = Co-first authors - ICML

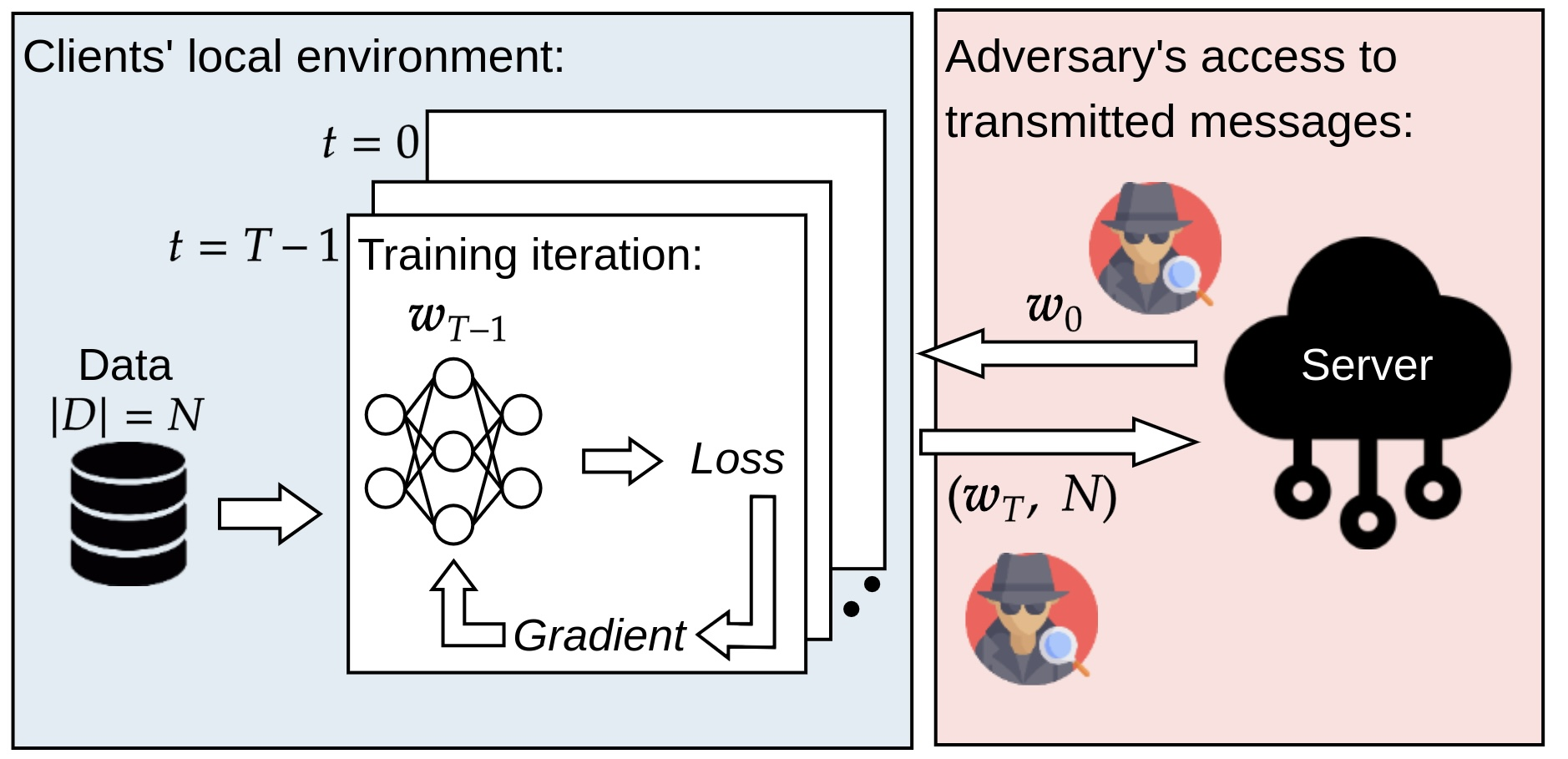

Surrogate Model Extension (SME): A Fast and Accurate Weight Update Attack on Federated Learning2023

Surrogate Model Extension (SME): A Fast and Accurate Weight Update Attack on Federated Learning2023 - TMLR

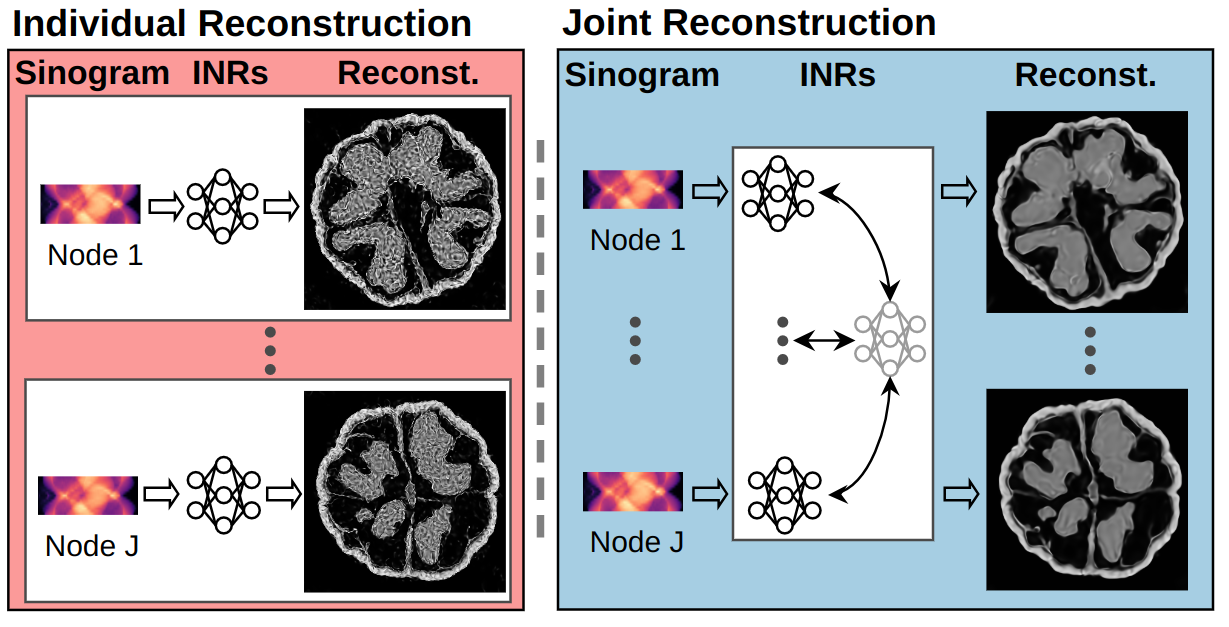

Implicit Neural Representations for Robust Joint Sparse-View CT Reconstruction2024* = Co-first authors

Implicit Neural Representations for Robust Joint Sparse-View CT Reconstruction2024* = Co-first authors